Parse science on reddit.com v0.1

Posted on 2018 / 08 / 01 in education

This work is devoted to parsing the science section of reddit.com (r/science) in order to build a database of posts. The main purpose is to accumulate information, which can later be used for semantic analysis and for practice with Big Data. The goal breaks down into the following tasks:

- to choose the necessary libraries;

- to find the addresses (URLs) to parse and understand how they are structured;

- to write a function that parses the necessary information;

- to organize the accumulation of information;

- to propose a way of processing the collected information and obtaining some useful result.

This topic is relevant because algorithms for accumulating and analyzing big data are now used everywhere. The collected information can be used to monitor current trends in science, or simply to learn English through the most frequent words (to expand your vocabulary). Besides, it can serve simply as a tool for personal development.


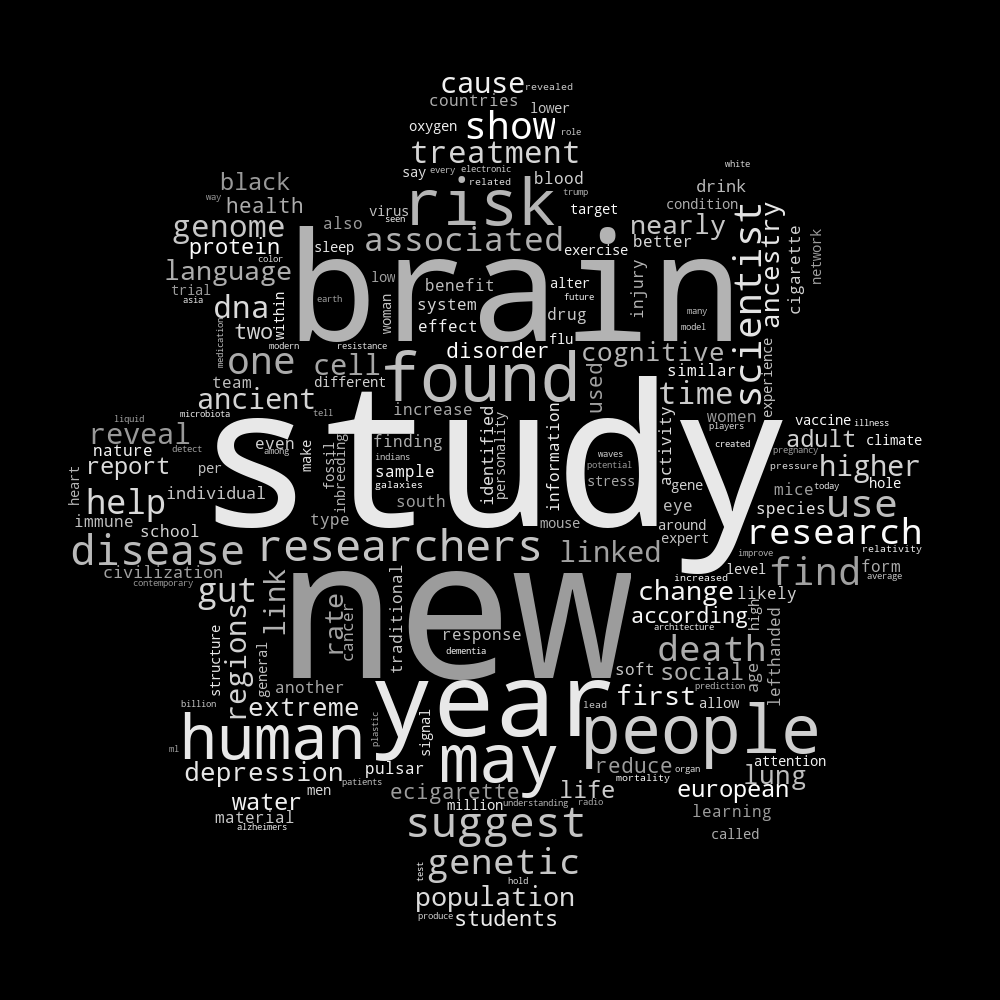

Fig. 1. Example of the script's output.

1. Development environment and libraries

The first step in such work is setting up the development environment and its settings. However, this topic is quite extensive and, more importantly, highly individual. I use Python with the PyCharm IDE or Jupyter Notebook, and the Atom editor for notes. In the end, though, a plain text editor and a command line are enough as a working environment.

This work is based on two interesting and useful articles (1, 2) and, of course, Google.

A few more details about this work:

- the code was written, tested and run on Ubuntu 16.04 x64;

- the code is now used on Arch Linux and has been upgraded to Python 3.8, but the listings below are the original Python 2.7 version;

- Python 2.7 and Firefox Quantum 58.0.1 (64-bit) were used.

Each section starts with commented code, followed by explanations where necessary. So, let's get down to business.

The necessary libraries:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

import requests
import json
from fake_useragent import UserAgent  # user camouflage
import pandas as pd  # our "database"
from PIL import Image  # to load the mask image
from wordcloud import WordCloud  # for word cloud creation
import numpy as np  # to turn the mask image into an array
from os import path
import pymorphy2  # for words normalization
from nltk.corpus import stopwords  # lists of stop words
from collections import Counter  # for counting words (not used in the listings below)
import time
import re
import random
```

This is a kind of "equipment and reagent preparation". The main objects of the research are the site and the data set, for which we need the requests, json and fake_useragent libraries, plus pandas to store the data. As a useful result, I propose building a cloud of the most frequently occurring words; for this we also need wordcloud, numpy and PIL, as well as libraries for working with text (nltk, collections, pymorphy2). The logic of the work: get information from the site (parsing), process it, add it to the database, save the database to disk, calculate the frequency of words in the database, and build a word cloud (the useful result).
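The "calculate the frequency of words" step can be done with collections.Counter, which is imported above but not used in the later listings (the wordcloud library counts frequencies on its own). A minimal Python 3 sketch, assuming the text has already been cleaned into one space-separated string; word_frequencies is a hypothetical helper, not part of the original script:

```python
from collections import Counter


def word_frequencies(text, top_n=10):
    """Hypothetical helper: the top_n most frequent words of a space-separated string."""
    # split() without arguments also drops empty strings left by repeated spaces
    counts = Counter(text.split())
    return counts.most_common(top_n)


sample = "cells cancer study cells brain cancer cells"
print(word_frequencies(sample, top_n=2))  # [('cells', 3), ('cancer', 2)]
```

A dictionary built from such counts (dict(Counter(...))) could be passed to WordCloud.generate_from_frequencies instead of calling generate on the raw string.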

Note that, thanks to the first line of the header (#!/usr/bin/env python), the script can be run directly from the command line without specifying an interpreter, provided the file is executable. The second line (# -*- coding: utf-8 -*-) declares the source encoding, which Python 2 needs in order to work correctly with UTF-8 and Unicode.

2. Parsing the site

We have selected the object of study and prepared everything necessary for our experiment (section 1). Now we need to decide how to receive and download the data. One of the most obvious and universal ways is to parse the HTML code of a page directly: you request the page by its address and get its HTML. However, this gives us only the information visible on the page. That is usually enough, but it is often worth reading about additional features the site offers.

After a short search on the Internet, we can find article 2, which describes an interesting way of loading reddit pages in JSON format. This format is very convenient for further processing.

Let's check it in practice by opening a reddit page in JSON format in a browser (for example, https://www.reddit.com/r/science/new/.json). Note the clearer structure and the additional information about each news item on the page. So it makes sense to choose the more informative approach and parse the JSON versions of the pages.
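The JSON version of a listing page is a nested dictionary: the posts sit in data['children'], each post's properties in its own 'data' dictionary, and the 'name' of a post (its fullname, such as t3_xxxxx) is what the after parameter expects in order to fetch the next page. A minimal Python 3 sketch that parses a small, made-up payload with the same nesting (the sample values and the helper titles_and_next_url are hypothetical, and a real page contains far more fields):

```python
import json

# A trimmed, made-up payload with the same nesting as a reddit listing page
SAMPLE = """
{"kind": "Listing",
 "data": {"after": "t3_bbb",
          "children": [
            {"kind": "t3", "data": {"name": "t3_aaa", "id": "aaa", "title": "First post"}},
            {"kind": "t3", "data": {"name": "t3_bbb", "id": "bbb", "title": "Second post"}}
          ]}}
"""

BASE_URL = 'https://www.reddit.com/r/science/new/.json'


def titles_and_next_url(raw_text):
    """Hypothetical helper: post titles and the URL of the next page."""
    listing = json.loads(raw_text)
    children = listing['data']['children']
    titles = [child['data']['title'] for child in children]
    # the fullname of the last post continues the listing, as in collect_news
    last_name = children[-1]['data']['name']
    next_url = BASE_URL + '?after=' + last_name
    return titles, next_url


titles, next_url = titles_and_next_url(SAMPLE)
print(titles)    # ['First post', 'Second post']
print(next_url)  # https://www.reddit.com/r/science/new/.json?after=t3_bbb
```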

First, we download the data:

```python
# For all news

def read_js(req):
    """Parse the JSON body of a response; return None if it is not valid JSON."""
    data = None
    try:
        data = json.loads(req.text)
    except ValueError:
        print('error in js')
        # TODO: if this error is raised, the JSON itself has errors and needs hand-parsing
    return data


def collect_news(num_news=100):
    """Download at least num_news of the newest r/science posts as a dict of columns."""
    base_url = 'https://www.reddit.com/r/science/new/.json'
    ua = UserAgent()
    data_all = []
    while len(data_all) < num_news:
        time.sleep(2)  # pause between requests to avoid a ban
        url = base_url
        if len(data_all) != 0:
            # continue the listing after the last post we already have
            last = data_all[-1]['data']['name']
            url = base_url + '?after=' + str(last)
        req = requests.get(url, headers={'User-Agent': ua.chrome})
        json_data = read_js(req)
        children = json_data['data']['children']
        if not children:  # no more posts: stop instead of looping forever
            break
        data_all += children

    # column names: the properties of the last downloaded post
    keys_all = data_all[-1]['data'].keys()
    tidy_data = {}
    for k in keys_all:
        tidy_data[k] = []
    for d in data_all:
        data = d['data']
        for k in tidy_data:
            # one value per post; None if this post lacks the property
            if k not in data:
                tidy_data[k] += [None]
            else:
                tidy_data[k] += [data[k]]
    return tidy_data


num_post = 200
tidy_data = collect_news(num_post)
# print("raw new data len: ", len(tidy_data['title']))
```

In small projects, I try to organize the code as a set of functions, since this is clearer and easier to follow at first. So the main actions are implemented as functions.

The function collect_news returns a dictionary that stores the properties of all downloaded news posts. The dictionary keys are the properties of the last downloaded post (we assume that all posts have the same set of properties). Each key maps to a list whose length equals the number of downloaded posts (the loop stops once at least num_news posts have been collected; 100 by default, while the script above requests 200). Each element of such a list is the value of this property for one post; if a post has no such property, the element is None. This logic keeps all lists the same length, which we will need later to create a DataFrame.
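The conversion from a list of posts to a dictionary of equally long columns can be checked on toy data. A minimal Python 3 sketch of the same logic as the second half of collect_news, rewritten as a hypothetical function to_columns and run on two made-up posts; as in the original, the column names come from the last post, so a property that only earlier posts have is dropped:

```python
def to_columns(posts):
    """Hypothetical helper: turn a list of post dicts into a dict of equal-length columns.

    Column names come from the last post, as in collect_news; a post that
    lacks a property gets None in that column.
    """
    columns = {key: [] for key in posts[-1]}
    for post in posts:
        for key in columns:
            columns[key].append(post.get(key))  # .get returns None if missing
    return columns


posts = [
    {'id': 'aaa', 'title': 'First post'},  # no 'score'
    {'id': 'bbb', 'title': 'Second post', 'score': 7},
]
columns = to_columns(posts)
print(columns['score'])  # [None, 7]
```

Because every column has the same length, pd.DataFrame.from_dict can build a table from such a dictionary directly.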

The second function, read_js, parses the JSON response with the json library.

Note that read_js assumes the JSON is well-formed. If it is not, the function returns None, and collect_news breaks as soon as it tries to read json_data['data']. This is a great opportunity to show your skills, username, and rewrite the code so that it keeps working even when this exception occurs.
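One possible answer to this exercise, as a sketch rather than the author's code: a hypothetical helper safe_children that returns an empty list instead of None when the body is not valid JSON or lacks the expected keys, so that the caller can skip or retry a bad page. It takes the response text rather than the response object, which makes it easy to test without network access (Python 3):

```python
import json


def safe_children(raw_text):
    """Hypothetical helper: the posts of a listing page, or [] if the page is malformed."""
    try:
        listing = json.loads(raw_text)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return []
    try:
        children = listing['data']['children']
    except (KeyError, TypeError):  # valid JSON, but not the expected shape
        return []
    return children if isinstance(children, list) else []
```

In collect_news, children = safe_children(req.text) could then replace the call to read_js; an empty result would mean "wait and retry, or stop", with a limit on the number of retries.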

Let's look at the requests to the site in more detail. To avoid a ban, we send the User-Agent header of an ordinary browser; that is why we need the fake_useragent library. For a start, combined with fairly long pauses between requests (2 s in the code above; they can also be randomized) and a relatively small number of downloaded pages, this is enough.
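Randomizing the pause takes only a few lines with the random module. A minimal Python 3 sketch, assuming a base pause of 2 s plus up to 1 s of random jitter (both values are illustrative); polite_pause is a hypothetical helper, and the sleep function is passed in so that its behaviour can be checked without actually waiting:

```python
import random
import time


def polite_pause(base=2.0, jitter=1.0, sleep=time.sleep):
    """Hypothetical helper: sleep for base plus a random extra delay in [0, jitter] s.

    Returns the delay that was used, which is handy for logging.
    """
    delay = base + random.uniform(0, jitter)
    sleep(delay)
    return delay


# Inside collect_news, time.sleep(2) could be replaced with:
# polite_pause()
```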

Then all that remains is to save the collected information as an "object-feature" matrix for further use.

3. Transforming and saving data

The main part is written; it remains to accumulate the data, and the pandas library is perfect for this.

```python
# Add data to the existing df and save it

def log_write(feature, value=''):
    # Append a piece of text to the log file
    with open('/home/username/scripts/reddit_science_log.txt', 'a') as f_log:
        f_log.write(feature + value)


# Start a new log line with the current time
log_write('', str(time.asctime()) + '\t')

len_old = 0
len_new = 0

final_df_new = pd.DataFrame.from_dict(tidy_data, orient='columns')
try:
    final_df_old = pd.read_pickle('/home/username/scripts/science_reddit_df')  # load
    len_old = len(final_df_old.index)
    # print('old len: ', len_old, ' new df len: ', len(final_df_new.index))
    # DataFrame.append was removed in pandas 2.0; pd.concat does the same job
    final_df = pd.concat([final_df_old, final_df_new], ignore_index=True)
    final_df.drop_duplicates(subset=['id'], inplace=True)
    len_new = len(final_df.index)
    # print('final df len: ', len_new, '; len added: ', len_new - len_old)
    log_write('\t new news message: ', str(len_new - len_old))
except IOError:
    # No saved database yet: start a new one
    final_df = final_df_new

# Save df to file
final_df.to_pickle('/home/username/scripts/science_reddit_df')  # save
```

Along with the check for an existing database (science_reddit_df), we have written a simple logging function, log_write, that lets us observe how the code behaves. The log file looks like this:

Tue Jan 23 09:35:56 2018 new news message: 155

Tue Jan 23 09:39:21 2018 new news message: 0

Tue Jan 23 22:41:39 2018 new news message: 33

Wed Jan 24 09:23:09 2018 new news message: 11

I think it's pretty clear.
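Since every run writes one line, the log is easy to analyse afterwards. A minimal Python 3 sketch with a hypothetical helper total_new_posts, assuming lines in the format shown above (a time.asctime() timestamp, tabs, then the counter); the regular expression only looks at the beginning of a line, so the extra tab and the "raw and tidy words" field that the full script appends are tolerated:

```python
import re

# "<time.asctime() timestamp><tabs> new news message: <count>..."
LINE_RE = re.compile(r'^(?P<stamp>.+?\d{4})\s+new news message: (?P<count>\d+)')


def total_new_posts(lines):
    """Hypothetical helper: (number of logged runs, total number of new posts)."""
    runs, total = 0, 0
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            runs += 1
            total += int(match.group('count'))
    return runs, total


log_lines = [
    'Tue Jan 23 09:35:56 2018\t new news message: 155',
    'Tue Jan 23 09:39:21 2018\t new news message: 0',
    'Tue Jan 23 22:41:39 2018\t new news message: 33',
    'Wed Jan 24 09:23:09 2018\t new news message: 11',
]
print(total_new_posts(log_lines))  # (4, 199)
```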

The logic of the science news database is as follows: get new information, check whether the saved database can be opened, create a new DataFrame, merge the two DataFrames, delete duplicate rows (by the id column, which identifies a news post), and save the updated database. If there is no saved database yet, the new DataFrame simply becomes the database.
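The merge-and-deduplicate step can be illustrated without pandas. A minimal Python 3 sketch on lists of dicts, using a hypothetical merge_posts helper: old rows come first, and a new post is added only if its id has not been seen yet, which mirrors drop_duplicates keeping the first occurrence:

```python
def merge_posts(old_rows, new_rows, key='id'):
    """Hypothetical helper: append new_rows to old_rows, skipping already seen keys.

    Returns the merged list and the number of rows actually added.
    """
    seen = set()
    merged = []
    for row in old_rows + new_rows:
        if row[key] not in seen:
            seen.add(row[key])
            merged.append(row)
    return merged, len(merged) - len(old_rows)


old = [{'id': 'a', 'title': 'First'}, {'id': 'b', 'title': 'Second'}]
new = [{'id': 'b', 'title': 'Second'}, {'id': 'c', 'title': 'Third'}]
merged, added = merge_posts(old, new)
print([row['id'] for row in merged], added)  # ['a', 'b', 'c'] 1
```

The second return value plays the role of len_new - len_old in the log.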

4. Building a word cloud

As a useful output of the code, I suggest building a cloud of the most frequently occurring words. To do this, we need to select the relevant text data from the database and preprocess it.

```python
ind_all = final_df.index
# titles from the last num_post rows of the database
all_text = final_df.loc[ind_all[-num_post:], ['title']]
tag_re = re.compile(r'(<!--.*?-->|<[^>]*>)')
# clear each post from tags and extra whitespace
all_text = [re.sub(' +', ' ', tag_re.sub(' ', x[0])) for x in all_text.values]

list_in = [all_text]
list_out = ['']


def checkGood(symb):
    # allowed: Latin letters and digits, checked in two encodings just in case (Python 2.7)
    good1 = 'qwertyuiopasdfghjklzxcvbnm1234567890'.decode('utf-8')
    good2 = u'qwertyuiopasdfghjklzxcvbnm1234567890'
    return symb in good1 or symb in good2


morph = pymorphy2.MorphAnalyzer()
stop_words = set(stopwords.words('english'))
stop_words.update([u'new', u'study', u'may'])

for t in range(len(list_in)):
    self_messages = list_in[t]
    str_data = ' '.join(self_messages).lower()

    # clear from "bad" symbols: spaces and newlines become spaces, other disallowed characters are dropped
    text = ''
    for i in str_data:
        if i == ' ' or i == '\n':
            text += ' '
        elif checkGood(i):
            text += i
    str_data = re.sub(' +', ' ', text)

    # normalize words (split() skips empty strings)
    str_data = ' '.join(morph.parse(w)[0].normal_form for w in str_data.split())

    # stop words check
    words = str_data.split()
    w_before = len(words)
    words = [w for w in words if w not in stop_words]
    w_after = len(words)
    log_write('\t raw and tidy words: ', str([w_before, w_after]) + '\n')
    list_out[t] = ' '.join(words)

str_news = list_out[0]

gear_mask = np.array(Image.open("/home/username/scripts/gear2.png"))
wc = WordCloud(background_color="black", mask=gear_mask, collocations=False)
# generate word cloud
wc.generate(str_news)


def grey_color_func(word, font_size, position, orientation, random_state=None, **kwargs):
    # random light grey: lightness between 60% and 100%
    return "hsl(0, 0%%, %d%%)" % random.randint(60, 100)


wc2 = wc.recolor(color_func=grey_color_func, random_state=3)
# store to file
wc2.to_file("/home/username/scripts/science_reddit_raw.png")
```

The logic of the code is as follows: select the titles from the last num_post rows of the database; clear the text from HTML tags and extra spaces; then process the whole text in a loop: remove everything except Latin letters and digits*, remove extra spaces again, normalize the words (reduce them to their "normal" form with pymorphy2), and remove "stop" words (the most frequent words, such as articles, pronouns, etc.); finally, output one long string of words separated by spaces. Note that pymorphy2 is a morphological analyzer for Russian, so English words pass through the normalization step essentially unchanged.

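* Since Python 2.7 has certain encoding problems, checkGood compares each character with two versions of the allowed alphabet (one decoded from a byte string, one written as a unicode literal), just in case.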
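The cleaning steps can also be collected into one function, which is one of the improvements suggested in the conclusion. A minimal Python 3 sketch, assuming a small hypothetical stop-word set in place of nltk's English list and skipping the pymorphy2 step; clean_titles is a hypothetical name. Python 3 strings are Unicode, so one check per character is enough:

```python
import re

TAG_RE = re.compile(r'(<!--.*?-->|<[^>]*>)')
# hypothetical stand-in for stopwords.words('english') plus the custom words
STOP_WORDS = {'a', 'an', 'the', 'of', 'in', 'on', 'and', 'new', 'study', 'may'}
ALLOWED = set('abcdefghijklmnopqrstuvwxyz0123456789 ')


def clean_titles(titles, stop_words=STOP_WORDS):
    """Hypothetical helper: one lower-case string of words without tags or stop words."""
    text = ' '.join(TAG_RE.sub(' ', title) for title in titles).lower()
    text = text.replace('\n', ' ')
    # keep Latin letters, digits and spaces; drop everything else
    text = ''.join(ch for ch in text if ch in ALLOWED)
    # split() also collapses repeated spaces
    words = [w for w in text.split() if w not in stop_words]
    return ' '.join(words)


print(clean_titles(['New <b>study</b> of the Brain!', 'Cells, in 3D']))  # brain cells 3d
```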

The resulting string is used to build a word cloud with the wordcloud library. After building the cloud, we recolor it (in my case, 50 shades of white, fig. 1). To make the cloud look nicer, it can be shaped by a mask (an image). The mask should be binarized, i.e. contain only two kinds of pixels: black (0) and white (255); wordcloud treats the white areas as masked out and draws words only in the rest. Several websites can do this and are easy to find; I used this one: 3. The operation is called thresholding.
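Thresholding itself is simple: every pixel at or above a cut-off becomes white (255), every other pixel black (0). A minimal Python 3 sketch on a grey-scale image stored as nested lists, assuming a cut-off of 128 (an illustrative value); threshold is a hypothetical helper. For a grey-scale numpy array arr, the equivalent would be np.where(arr >= 128, 255, 0).astype(np.uint8).

```python
def threshold(pixels, cutoff=128):
    """Hypothetical helper: binarize a grey-scale image given as rows of 0-255 values.

    Pixels at or above cutoff become white (255), the rest black (0).
    In a wordcloud mask, white areas are masked out and words go into the black ones.
    """
    return [[255 if value >= cutoff else 0 for value in row] for row in pixels]


image = [
    [250, 240, 10],
    [130, 127, 0],
]
print(threshold(image))  # [[255, 255, 0], [255, 0, 0]]
```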

Finally, a small gift for Ubuntu users (and probably not only for them). The resulting image can be converted into one with a transparent background by a simple terminal command (convert is part of ImageMagick):

convert ~/news_raw.png -transparent black ~/news_ready.png

Similarly, you can run the parser automatically at login through the Startup Applications tool:

Menu > Startup Applications > Add:

Name: reddit_science_reader

Command: sh -c "sleep 600 && /FULL_PATH/science_reddit.py"

The delay (in seconds) gives the system time to connect to the Internet before the script runs. To be started this way, the script must be executable (chmod +x); alternatively, specify "python /FULL_PATH/science_reddit.py" as the command.
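A fixed sleep 600 either waits too long or, on a slow connection, not long enough. As an alternative sketch that is not part of the original setup, the script itself could retry until the site is reachable. wait_until and reddit_reachable are hypothetical helpers; the attempt count and delay are illustrative, and the check function is passed in, so any connectivity test can be used (Python 3):

```python
import socket
import time


def wait_until(check, attempts=10, delay=30, sleep=time.sleep):
    """Hypothetical helper: call check() until it returns True.

    Sleeps `delay` seconds between tries; returns False if all attempts fail.
    """
    for attempt in range(attempts):
        if check():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False


def reddit_reachable(host='www.reddit.com', port=443, timeout=5):
    """Hypothetical helper: True if a TCP connection to host:port can be opened."""
    try:
        socket.create_connection((host, port), timeout=timeout).close()
        return True
    except OSError:
        return False


# At the top of the script, before collect_news:
# if not wait_until(reddit_reachable):
#     raise SystemExit('no network connection')
```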

5. Conclusion

Let's sum up. The main goal was to accumulate information about the science news that appears on reddit.com. To do this, we had to solve a number of tasks:

- get information from the site;

- parse the information and select key properties for each news post;

- save each news post in an "object-feature" matrix.

With the code above, all tasks are solved and the goal is achieved. Our hard drive now holds the file science_reddit_df, which grows and accumulates information every time the script runs. In addition, we have found a use for this information: a cloud of the most frequent words in the news titles.

5.1 Ways to improve (tasks for individual solving)

Any project can be optimized and updated. I hope readers will also adapt this material to their own needs. At first glance, the following could be implemented:

- write an error handler (checking values returned by functions);

- write text processing as a separate function;

- use an object-oriented approach (use classes for news);

- implement HTML parsing with the Beautiful Soup library;

- write a user interface (GUI);

- rewrite the code in Python 3.
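For the object-oriented variant, a news post could be a small class built from one element of data['children']. A minimal sketch using a Python 3.7+ dataclass; the NewsPost name and the selection of fields are assumptions (id, name, title, score and created_utc are fields of reddit posts, but which ones to keep is up to you):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NewsPost:
    """Hypothetical class: one r/science post with a few selected properties."""
    id: str
    name: str
    title: str
    score: Optional[int] = None
    created_utc: Optional[float] = None

    @classmethod
    def from_child(cls, child):
        """Build a post from one element of a listing's data['children']."""
        data = child['data']
        return cls(
            id=data['id'],
            name=data['name'],
            title=data['title'],
            score=data.get('score'),
            created_utc=data.get('created_utc'),
        )


child = {'kind': 't3', 'data': {'id': 'aaa', 'name': 't3_aaa', 'title': 'First post', 'score': 7}}
post = NewsPost.from_child(child)
print(post.title, post.score, post.created_utc)  # First post 7 None
```

A list of such objects converts back to the "object-feature" table with pd.DataFrame([dataclasses.asdict(p) for p in posts]).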

Thank you for being with us and have a nice day!