Wordcloud of news or dialog from vk.com social network

Posted on 2018 / 08 / 12 in education

Greetings!

Let's take on a task similar to parsing reddit.com: collecting and analyzing a news feed or dialogues from the vk.com social network. The collected information is stored in a database. In my case there are 2 goals:

- to perform a one-time analysis of all my dialogues in the social network vk.com;

- to analyze 200 news posts from my news feed every time I turn on my computer.

Reaching these goals involves a set of tasks:

- to choose the necessary libraries;

- to find the addresses to parse and understand their logic;

- to write a function that parses the necessary information;

- to organize the accumulation of information;

- to propose a way of processing the received information into some useful result.

The topic is relevant because algorithms for collecting and analyzing big data are now widespread. The collected information can be used for a superficial analysis of your own behavior and interests, and the resulting picture also makes an interesting avatar.

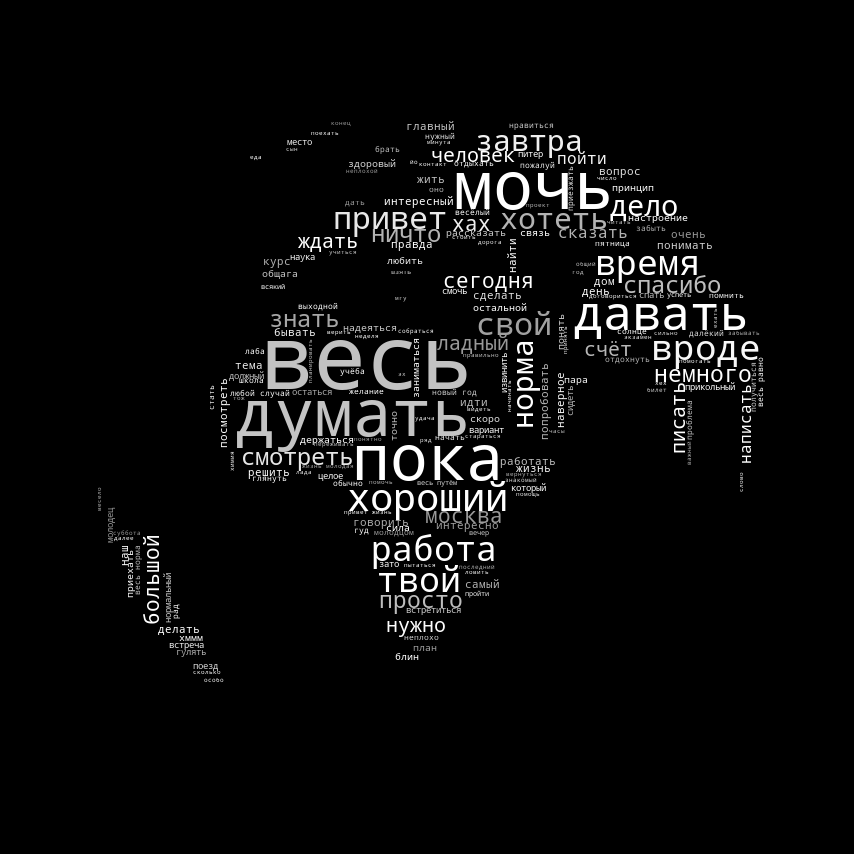

Fig. 1. Example of script result for my dialogues.

This work was driven by personal interest and made possible by the excellent vk.com documentation, and let's not forget Google.

I should point out some specifics:

- the code was written and tested on Ubuntu 18.04 x64;

- Python 2.7 and the vk API are used.

Each chapter comes with commented code, followed by explanations where necessary. So, let's get down to business.

1. News feed analysis

1.1 Selecting the required libraries

#!/usr/bin/env python

# -*- coding: utf-8 -*-

from PIL import Image

from os import path

from wordcloud import WordCloud

import pymorphy2

import time

import re

import numpy as np

import random

import pandas as pd

from nltk.corpus import stopwords

from collections import Counter

import vk

# -31480508 pikabu - You can see it as group id in browser bar

# -20629724 habrahabr

# -34274053 moscow

# -129636704 space (#ВКосмосе)

# -77270571 geektimes

# -46252034 naked science

# -227 MSU

There are no libraries for making web requests here (requests, fake_useragent) because vk.com has its own "language" of communication, the API, and a Python wrapper for it (the vk library).

As a useful result, I propose building a cloud of the most frequent words. This needs a few more Python libraries (wordcloud, numpy), including ones for working with text (nltk, collections, pymorphy2). The workflow is: get information from vk.com (through the API), process it, add it to the database, save the database to disk, count word frequencies in the database, and build the word cloud (the useful result).
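The frequency-counting step is never shown on its own in this post, because the wordcloud library counts words internally. To inspect the counts by hand, `Counter` from the import list is enough. A minimal sketch, assuming the text has already been cleaned into one space-separated string; `sample_text` and `top_words` are illustrative names, not part of the script:

```python
# -*- coding: utf-8 -*-
from collections import Counter


def top_words(text, n=3):
    """Return the n most frequent words of a space-separated string."""
    words = [w for w in text.split(' ') if w]  # drop empty tokens
    return Counter(words).most_common(n)


# Hypothetical, already normalized text
sample_text = u'космос новость космос наука новость космос'
print(top_words(sample_text, 2))
```

The returned list of (word, count) pairs can be logged or compared between runs before any picture is drawn.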

Note that this code can be run directly from the command line without naming an interpreter (thanks to the #!/usr/bin/env python line), provided the file is marked as executable. The second header line (# -*- coding: utf-8 -*-) is needed to work correctly with UTF-8 and Unicode text in the source file.

1.2 Initializing API

To work with the API, we will need access, which is provided by vk.com. The token (a long and unique set of numbers and letters) is responsible for access. We will also need to initialize the session.

access_token = "your_token_here"

session = vk.Session(access_token=access_token)

vkapi = vk.API(session, v='5.71')

Pay attention to the API version and to which methods exist or have changed in it (this matters later, or if something breaks). While it works, don't touch it.

You can get a token by registering the application in the VK and sending a specific request in the browser address bar. You can find more information about this in official documentation. It is important to pay attention to the token validity period, user ID and access rights for the application.
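After you approve the application, the browser lands on an address whose fragment (the part after `#`) carries the token, its lifetime in seconds and your user id. A minimal sketch of pulling these fields out, assuming the redirect format described in the official documentation; the URL below is a made-up example rather than a real token, and `parse_token_url` is a hypothetical helper:

```python
try:
    from urllib.parse import urlparse, parse_qs  # Python 3
except ImportError:
    from urlparse import urlparse, parse_qs      # Python 2


def parse_token_url(url):
    """Extract access_token, expires_in and user_id from the redirect URL."""
    fragment = urlparse(url).fragment
    fields = parse_qs(fragment)
    return {
        'access_token': fields['access_token'][0],
        'expires_in': int(fields['expires_in'][0]),  # validity period, seconds
        'user_id': int(fields['user_id'][0]),
    }


# Invented example of a redirect address
example = ('https://oauth.vk.com/blank.html'
           '#access_token=abc123&expires_in=86400&user_id=1')
info = parse_token_url(example)
print(info['user_id'], info['expires_in'])
```

Keeping the three values together makes it easy to notice when the token is about to expire.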

Be careful! Do not allow other people's applications to access your personal data!

1.3 Fetching information and writing logs

def log_write(feature, value=''):
    '''
    :param feature: name of the logged feature
    :param value: value of the logged feature (string)
    :return: None
    '''
    # Append the record to the log file
    f_log = open('/home/username/scripts/vk_log.txt', 'a')
    f_log.write(feature + value)
    f_log.close()

def getLikes(user_id, cnt, vkapi):
    '''
    :param user_id: vk user id
    :param cnt: number of requests to make (100 posts each)
    :param vkapi: vk API object
    :return: posts data dictionary
    '''
    subscriptions_list = vkapi.users.getSubscriptions(user_id=user_id, extended=0)['groups']['items']
    # build the list of group ids (with a leading minus) for the next method
    groups_list = ['-' + str(x) for x in subscriptions_list]
    # download the newsfeed in portions of 100 posts
    all_newsfeeds = []
    newsfeed = None
    for c in range(cnt):
        kwargs = {
            'filters': 'post',
            'source_ids': ', '.join(groups_list),
            'count': 100,
        }
        if c > 0:
            # continue from where the previous response ended
            kwargs['start_from'] = newsfeed['next_from']
        newsfeed = vkapi.newsfeed.get(**kwargs)
        all_newsfeeds.append(newsfeed['items'])
        time.sleep(1)
    # Collect every key that occurs in any post (json structure)
    all_keys = []
    for portion in all_newsfeeds:  # portion of news (100)
        for post in portion:  # each news item in the portion
            for key in post:
                if key not in all_keys:
                    all_keys.append(key)
    # set output dictionary structure
    post_data = {}
    for key in all_keys:
        post_data[key] = []
    # Collect data from newsfeeds; missing keys are filled with 'None'
    for portion in all_newsfeeds:
        for post in portion:
            for key in post_data:
                if key in post:
                    post_data[key].append(post[key])
                else:
                    post_data[key].append('None')
    return post_data

Thus, we wrote a function that fetches the last cnt*100 posts from the news feed of the given user, and a simple logging function to track the status of program execution.
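The paging logic inside `getLikes` is easier to see in isolation: each response carries a `next_from` cursor, which is passed back as `start_from` on the next call. Below is a sketch with a stand-in for `vkapi.newsfeed.get`; `fake_newsfeed_get`, `fetch_pages` and the data are invented for illustration so that the example runs offline:

```python
def fake_newsfeed_get(count, start_from=None):
    """Stand-in for vkapi.newsfeed.get: serves 5 fake posts page by page."""
    posts = [{'post_id': i, 'text': 'post %d' % i} for i in range(5)]
    start = int(start_from) if start_from is not None else 0
    return {'items': posts[start:start + count],
            'next_from': str(start + count)}


def fetch_pages(get_page, pages, count):
    """Collect `pages` pages of `count` items, following the cursor."""
    items, cursor = [], None
    for _ in range(pages):
        kwargs = {'count': count}
        if cursor is not None:
            kwargs['start_from'] = cursor  # resume after the previous page
        response = get_page(**kwargs)
        items.extend(response['items'])
        cursor = response['next_from']
    return items


posts = fetch_pages(fake_newsfeed_get, pages=2, count=2)
print([p['post_id'] for p in posts])
```

Passing the fetch function as an argument keeps the loop testable without a token or a network connection.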

All that remains is to save the obtained information as an "object-feature" matrix and to process the texts.

1.4 Calling the functions and accumulating the information

# Start log file
log_write('', str(time.asctime()) + '\t')
user_id = 0  # placeholder: replace with your numeric vk.com id (int)
all_data = getLikes(user_id, 2, vkapi)  # Scan 2 * 100 = 200 news posts
log_write('\t message download: ', str(len(all_data['text'])))
# If human-readable dates are needed
# import datetime
# for data in all_data['date']:
#     print datetime.datetime.fromtimestamp(
#         int(data)
#     ).strftime('%Y-%m-%d %H:%M:%S')
# Add the data to the existing df and save it
len_old = 0
len_new = 0
try:
    final_df_new = pd.DataFrame.from_dict(all_data, orient='columns')
    final_df_old = pd.read_pickle('/home/username/scripts/vk_news_df')
    len_old = len(final_df_old.index)
    final_df_old = final_df_old.append(final_df_new, ignore_index=True)
    final_df_old.drop_duplicates(subset=['post_id'], inplace=True)
    final_df = final_df_old.copy()
    len_new = len(final_df.index)
    # write the number of new messages into the log file
    log_write('\t new news message: ', str(len_new - len_old))
except IOError:
    # No saved database yet: start a new one
    final_df = pd.DataFrame.from_dict(all_data, orient='columns')
    log_write('\t new news message: ', str(len(final_df.index)))
# Save df to file
final_df.to_pickle('/home/username/scripts/vk_news_df')

The logic of creating and replenishing the database is the same as in the article about scientific news: get new information - try to open the saved database - create a new DataFrame - merge the two DataFrames - delete duplicated rows (by the post_id column) - save the updated database.
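The merge-and-deduplicate step can be tried on toy data. The sketch below uses `pd.concat`, which does the same job as the `append` call in the listing (recent pandas releases no longer provide `DataFrame.append`); the two small frames are invented for illustration:

```python
import pandas as pd

# Hypothetical saved database and a fresh download that overlaps with it
old_df = pd.DataFrame({'post_id': [1, 2], 'text': ['a', 'b']})
new_df = pd.DataFrame({'post_id': [2, 3], 'text': ['b', 'c']})

# Stack the frames, then keep the first occurrence of every post_id
merged = pd.concat([old_df, new_df], ignore_index=True)
merged = merged.drop_duplicates(subset=['post_id'])

new_count = len(merged) - len(old_df)  # number of genuinely new posts
print(new_count)
```

The difference in length before and after the merge is the same figure the script writes to its log as the number of new messages.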

1.5 Processing and output of information

We are moving to the final stage - processing and output of information. We will process the last 200 news posts and output a cloud of the most frequently occurring words.

news_to_process = 200
ind_all = final_df.index
all_text = final_df.loc[ind_all[-news_to_process:], ['text']]
# Matches well-formed tags and html comments
tag_re = re.compile(r'(<!--.*?-->|<[^>]*>)')
# clear each post of tags and repeated whitespace
all_text = [re.sub(' +', ' ', tag_re.sub(' ', x[0])) for x in all_text.values]
list_in = [all_text]
list_out = ['']
for t in range(len(list_in)):
    self_messages = list_in[t]
    str_data = ' '.join(self_messages)
    str_data = str_data.lower()

    def checkGood(symb):
        # True only for lowercase Cyrillic letters
        good = u'ёйцукенгшщзхъэждлорпавыфячсмитьбю'
        return symb in good

    text = ''
    for i in str_data:
        if i == ' ' or i == '\n':
            text += ' '
        elif checkGood(i):
            text += i
    text = re.sub(' +', ' ', text)
    str_data = text[:]
    # Normalize word forms
    morph = pymorphy2.MorphAnalyzer()
    text = ''
    for i in str_data.split(' '):
        p = morph.parse(i)[0]
        text += p.normal_form + ' '
    str_data = text[:]
    # Stop words check
    stop_words = stopwords.words('russian')
    stop_words.extend([u'что', u'это', u'так',
                       u'вот', u'быть', u'как',
                       u'в', u'—', u'к',
                       u'на', u'ок', u'кстати',
                       u'который', u'мочь', u'весь',
                       u'еще', u'также', u'свой',
                       u'ещё', u'самый', u'ул', u'комментарий',
                       u'английский', u'язык'])
    words = str_data.split(' ')
    w_before = len(words)
    words = [i for i in words if i not in stop_words]
    w_after = len(words)
    log_write('\t raw and tidy words: ', str([w_before, w_after]) + '\n')
    str_data = ' '.join(words)
    list_out[t] = str_data[:]
str_news = list_out[0]
# Create wordcloud
alice_mask = np.array(Image.open("/home/username/scripts/gear2.png"))
wc = WordCloud(background_color="white", mask=alice_mask, collocations=False)
wc.generate(str_news)

def grey_color_func(word, font_size, position, orientation, random_state=None, **kwargs):
    # 50 shades of white
    return "hsl(0, 0%%, %d%%)" % random.randint(60, 100)

default_colors = wc.to_array()
wc2 = wc.recolor(color_func=grey_color_func, random_state=3)
# store to file
wc2.to_file("/home/username/conky/scripts/news_raw.png")

The logic of the code is as follows: select the texts of all posts from the database - strip html tags and extra spaces - process the whole text in a loop - remove everything that is not a Cyrillic letter - remove extra spaces again - lemmatize the words (reduce each to its "normal" form) - remove stop words (the most frequent ones, such as prepositions, pronouns, etc.) - output one long line of words separated by spaces.
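The cleaning chain can also be written more compactly with regular expressions instead of a character-by-character loop. This is a sketch of the same idea rather than the code used above: it skips the pymorphy2 normalization step, and `clean_text`, `NOT_CYRILLIC_RE` and the sample string are illustrative names and data:

```python
# -*- coding: utf-8 -*-
import re

TAG_RE = re.compile(r'(<!--.*?-->|<[^>]*>)')  # same tag pattern as in the listing
NOT_CYRILLIC_RE = re.compile(u'[^а-яё ]')      # anything but lowercase letters/space


def clean_text(raw, stop_words):
    """Strip tags, keep lowercase Cyrillic letters, drop stop words."""
    text = TAG_RE.sub(' ', raw).lower()
    text = NOT_CYRILLIC_RE.sub('', text.replace('\n', ' '))
    words = [w for w in text.split(' ') if w and w not in stop_words]
    return ' '.join(words)


sample = u'Это <b>Новость</b> о космосе, 2018!'
print(clean_text(sample, {u'это', u'о'}))
```

Filtering out empty tokens while splitting makes the separate "remove extra spaces" pass unnecessary.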

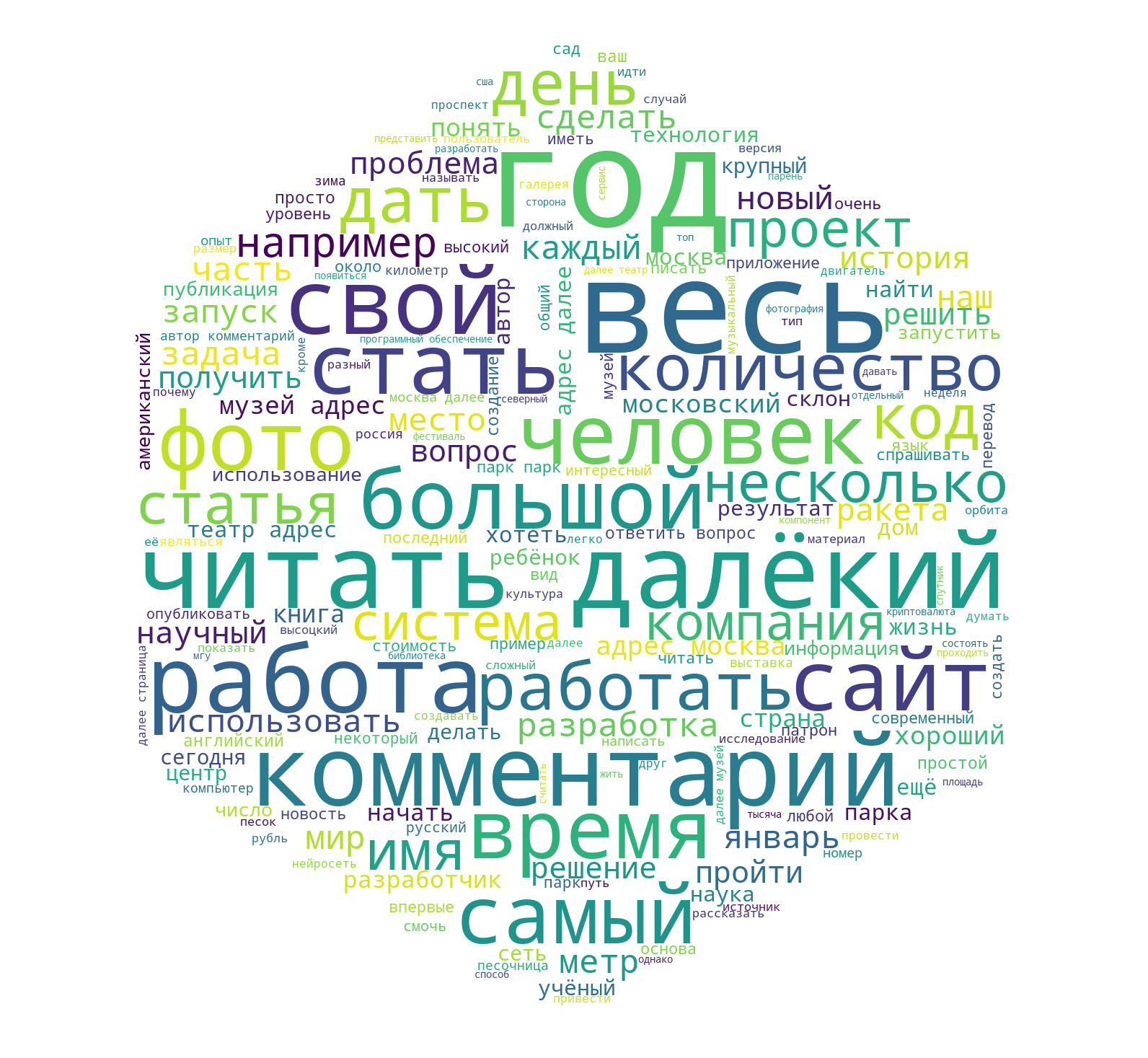

Fig. 2. Example of the news analysis.

Finally, a small gift for Ubuntu users (and, if you think about it, maybe not just for them). The resulting image can be converted into one with a transparent background by a simple terminal command (convert is part of ImageMagick):

convert ~/news_raw.png -transparent black ~/news_ready.png

You can also make the script run automatically at login through the Startup Applications tool:

Menu - Startup Applications - Add:

Name: vk_news_reader

Command: sh -c "sleep 600 && /FULL_PATH/vk_news.py"

The sleep sets a delay in seconds before execution, so that the system has time to connect to the Internet before the script runs (you can also name the interpreter explicitly: "python /FULL_PATH/vk_news.py").

2. Dialog analysis at vk.com

I think we are done with the first part. Now let's move on to dialogue analysis. The general idea remains the same: the vk API for information extraction - saving the information to disk - processing. Since the logic is unchanged, I will give the full code without further explanation.

import numpy as np

import scipy

import nltk

import pandas as pd

import seaborn

import matplotlib as mpl

import matplotlib.pyplot as plt

from PIL import Image

from os import path

from wordcloud import WordCloud

import pymorphy2

import time

import re

import vk

import pickle

from collections import Counter

session = vk.Session(access_token='you_token')

vkapi = vk.API(session)

friends = vkapi('friends.get')  # take the list of all friends of the user
# friends = [1111111, 2222222, 33333333]  # alternatively, set the list of friend ids manually

def get_dialogs(user_id):
    # Get dialog with user
    dialogs = vkapi('messages.getDialogs', user_id=user_id)
    return dialogs

def get_history(friends, sleep_time=0.4):
    # Get all dialogues history
    all_history = []
    i = 0
    for friend in friends:
        friend_dialog = get_dialogs(friend)
        time.sleep(sleep_time)
        dialog_len = friend_dialog[0]
        friend_history = []
        # vk API condition: count <= 200 per call, so page through
        # the history with an offset (short dialogs need a single call)
        resid = dialog_len
        offset = 0
        while resid > 0:
            friend_history += vkapi('messages.getHistory',
                                    user_id=friend,
                                    count=200,
                                    offset=offset)
            time.sleep(sleep_time)
            resid -= 200
            offset += 200
            if resid > 0:
                print '--processing', friend, ':', resid, 'of', dialog_len, 'messages left'
        all_history += friend_history
        i += 1
        print 'processed', i, 'friends of', len(friends)
    return all_history

all_history = get_history(friends)

# Save or load data

pickle.dump(all_history, open("all_vk_history.p", "wb"))

# all_history = pickle.load(open("all_vk_history.p", "rb"))

# user_id (the person of interest) and YOUR_ID (your own id) must both be
# defined as ints before this point
interesting_id = user_id  # set interesting user id

def get_messages_for_user(data, user_id):
    # Extract all messages written by the given user and by me
    user_messages = []
    my_messages = []
    for dialog in data:
        if type(dialog) == dict:
            if dialog['from_id'] == user_id:
                m_text = re.sub("<br>", " ", dialog['body'])
                user_messages.append(m_text)
            elif dialog['from_id'] == YOUR_ID:
                m_text = re.sub("<br>", " ", dialog['body'])
                my_messages.append(m_text)
    print 'Extracted', len(user_messages), ' user messages in total'
    print 'Extracted', len(my_messages), ' my messages in total'
    return user_messages, my_messages

user_messages, my_messages = get_messages_for_user(all_history, interesting_id)

list_in = [user_messages, my_messages]
list_out = ['', '']
for t in range(len(list_in)):
    self_messages = list_in[t]
    str_data = ' '.join(self_messages)
    str_data = str_data.lower()

    def checkGood(symb):
        # True only for lowercase Cyrillic letters
        good = u'ёйцукенгшщзхъэждлорпавыфячсмитьбю'
        return symb in good

    text = ''
    for i in str_data:
        if i == ' ' or i == '\n':
            text += ' '
        elif checkGood(i):
            text += i
    str_data = text[:]
    # Normalize word forms
    morph = pymorphy2.MorphAnalyzer()
    text = ''
    for i in str_data.split(' '):
        p = morph.parse(i)[0]
        text += p.normal_form + ' '
    str_data = text[:]
    # Stop words check
    from nltk.corpus import stopwords
    stop_words = stopwords.words('russian')
    stop_words.extend([u'что', u'это', u'так', u'вот', u'быть', u'как', u'в', u'—', u'к', u'на', u'ок', u'кстати',
                       u'ещё', u'вообще', u'мб', u'чтоть', u'весь'])
    words = str_data.split(' ')
    words = [i for i in words if i not in stop_words]
    str_data = ' '.join(words)
    list_out[t] = str_data
    print len(str_data.split(' '))

str_user = list_out[0]

str_my = list_out[1]

print 'Dict strong user: ', len(set(str_user.split(' '))) / float(len(str_user.split(' '))) * 100.0

print 'Dict strong my: ', len(set(str_my.split(' '))) / float(len(str_my.split(' '))) * 100.0

alice_mask = np.array(Image.open("IMAGE.jpg"))

wc = WordCloud(background_color="black", mask=alice_mask)

# generate word cloud

wc.generate(str_user)

# store to file

wc.to_file("User.png")

alice_mask = np.array(Image.open("IMAGE.jpg"))

wc = WordCloud(background_color="black", mask=alice_mask)

# generate word cloud

wc.generate(str_my)

# store to file

wc.to_file("Me.png")
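The 'Dict strong' figures printed in the listing are the share of distinct words among all words, in percent. A small sketch of that measure as a reusable function; `lexical_diversity` is an illustrative name, and unlike the listing it ignores the empty tokens produced by repeated spaces:

```python
def lexical_diversity(text):
    """Share of distinct words among all words of a string, in percent."""
    words = [w for w in text.split(' ') if w]  # skip empty tokens
    if not words:
        return 0.0  # avoid division by zero on empty input
    return len(set(words)) / float(len(words)) * 100.0


print(lexical_diversity('a b a c'))
```

A higher value means fewer repeated words; comparing the two speakers is only meaningful when their texts are of similar length, since the ratio tends to fall as a text grows.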

3. Conclusion

I hope that after reading this you have picked up some skills in working with the vk.com API and in processing text information.

With the code presented, all the tasks set are solved and the goals are achieved.

I hope you will see ways to improve this solution and that it will be useful to you. Thank you for reading, and have a nice day!